Generating Open-Ended Questions from News Articles

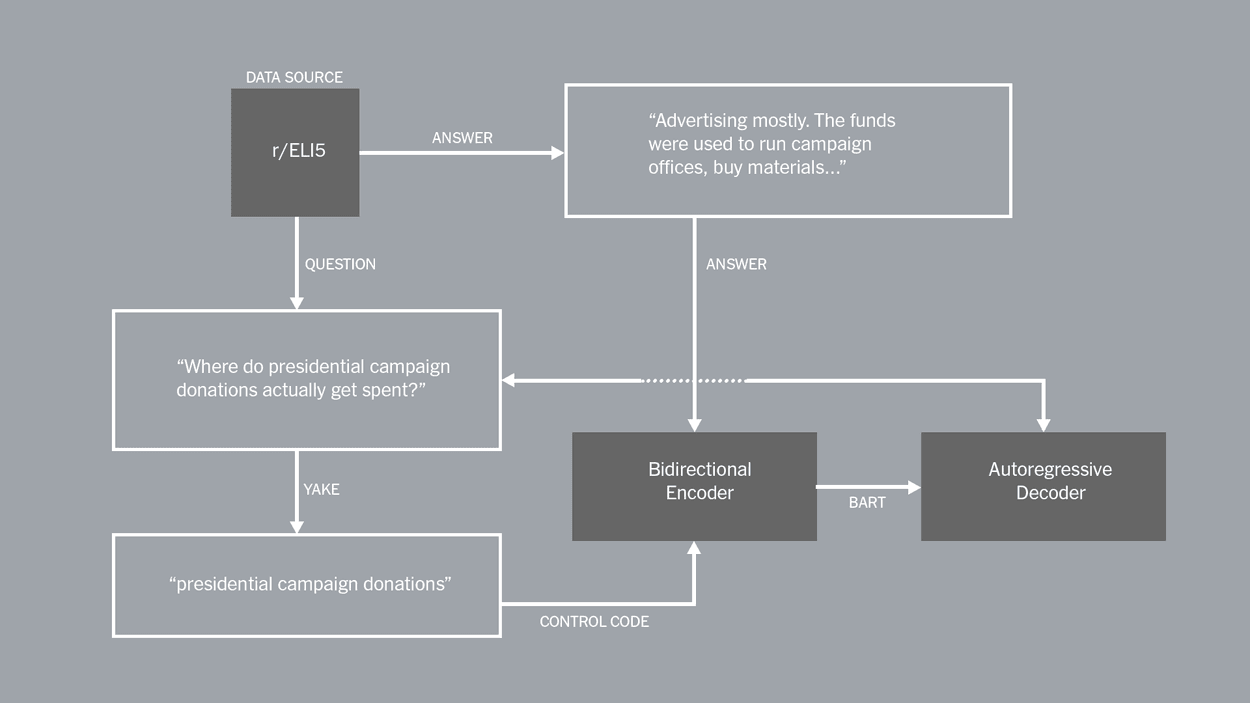

An example of our training method for Consistent.

Over the past several years, a key focus for R&D has been understanding how advances in machine learning can extend the capabilities of journalists and unlock reader experiences that aren’t possible today.

Questions and answers are central to how humans learn. Times journalism frequently uses FAQ and Q&A-style articles to help readers understand complex topics like the Covid-19 vaccines.

To enhance this style of journalism, we have experimented with large language models to match questions to answers, even if the reader asks their question in a novel way.

Last year we launched a new research effort to explore generating open-ended questions for news articles. Our hypothesis is that understanding the questions our news articles are implicitly answering may be helpful in the reporting process, and may ultimately enable us to create FAQ and Q&A-style articles more efficiently.

An inference diagram of our method, Consistent.

Our Approach

As a result of this exploration, we developed a tool we’ve named Consistent. Consistent is an end-to-end system for generating complex, open-ended questions that are both faithful to, and answerable from, the input article text.

At a high level, our approach is based on a BART-large model fine-tuned on the ELI5 dataset of question-answer pairs. We experimented with several language models and training methods, and in our testing this combination worked best. Around the model, we built a set of pre- and post-processing steps (shown in the inference diagram above) that, in our evaluation, outperform other published question generation techniques. We evaluated our work using both automatic and human evaluation.
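To make the shape of "pre-processing, model, post-processing" concrete, here is a minimal sketch of how such a pipeline could be wired together. It is an illustration under stated assumptions, not Consistent’s actual code: `preprocess`, `postprocess`, `generate_questions`, and the `MAX_WORDS` budget are hypothetical names, and the `generate` callable stands in for the fine-tuned BART-large model so the example runs without any model download.

```python
from typing import Callable, Iterable, Sequence

# Hypothetical type aliases: a generator maps a passage to candidate questions,
# and a filter decides whether a question should be kept for a given passage.
QuestionGenerator = Callable[[str], list[str]]
QuestionFilter = Callable[[str, str], bool]

# Hypothetical word budget. BART-large accepts at most 1,024 subword tokens,
# so a real system would truncate with the model's tokenizer instead.
MAX_WORDS = 400


def preprocess(article: str, max_words: int = MAX_WORDS) -> str:
    """Collapse whitespace and truncate the article to a rough word budget."""
    return " ".join(article.split()[:max_words])


def postprocess(candidates: Iterable[str]) -> list[str]:
    """Normalize spacing, keep only strings ending in '?', and drop
    case-insensitive duplicates while preserving the original order."""
    seen: set[str] = set()
    kept: list[str] = []
    for candidate in candidates:
        question = " ".join(candidate.split())
        key = question.lower()
        if question.endswith("?") and key not in seen:
            seen.add(key)
            kept.append(question)
    return kept


def generate_questions(
    article: str,
    generate: QuestionGenerator,
    filters: Sequence[QuestionFilter] = (),
) -> list[str]:
    """Run preprocessing, the (stand-in) model, cleanup, then every filter."""
    passage = preprocess(article)
    questions = postprocess(generate(passage))
    return [q for q in questions if all(keep(q, passage) for keep in filters)]


if __name__ == "__main__":
    # A fake generator standing in for the fine-tuned model (hypothetical output).
    def fake_model(passage: str) -> list[str]:
        return [
            "How will the pandemic affect sleepaway camps?",
            "how will the pandemic affect  sleepaway camps?",
            "Sleepaway camps reopened.",
        ]

    article = "Sleepaway camps across the United States experienced financial losses last year."
    print(generate_questions(article, fake_model))
    # ['How will the pandemic affect sleepaway camps?']
```

Keeping each filter as a plain function of `(question, passage)` means checks such as answerability or faithfulness can be added or removed without touching the generation step.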

If you want to read more about our approach, you can read our full paper. The paper was accepted to the EMNLP conference and will be presented in the second week of December.

{ "id": 20, "domain": "nyregion.txt", "paragraph": "Sleepaway camps across the United States experienced financial losses last year after government regulations prompted by the pandemic mostly kept them closed. Rules vary by state this year, but many camps will be allowed to reopen with social-distancing guidelines in place. Gov. Andrew M. Cuomo of New York has indicated that camps in the state will be able to open this year, but he has not provided clear guidelines for how they will do so yet.", "Consistent": "What happened to the sleepaway camps that were closed during the pandemic?", "leadsentencetoquestion": "What happened to the sleepaway camps?", "squadquestion": "What type of camps were closed due to the pandemic?", "randomkeyword": "States experienced financial losses last year after pandemic. Will sleepaway camps be allowed to reopen this year?", "bart": "How will the pandemic affect sleepaway camps?", "nonfactual": "How is Letitia James able to open a sleepaway camp in New York City after the pandemic?" }

An example of our output schema, generated from the article “These Y.M.C.A. Camps Served Children for 100 Years. Now They Are Shut.”
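The `nonfactual` entry in the example record shows what an unfaithful question looks like: it mentions Letitia James, who does not appear anywhere in the paragraph. A minimal sketch of a post-processing check that would catch this case is below. It assumes a crude capitalized-word heuristic in place of a real named-entity recognizer, and `candidate_entities`, `unsupported_entities`, `is_faithful`, and `NON_ENTITY_WORDS` are hypothetical names; we are not claiming this is the filter Consistent uses.

```python
import re

# Capitalized words that are usually not entities (a hypothetical, incomplete list).
NON_ENTITY_WORDS = {
    "What", "Why", "How", "When", "Where", "Who", "Which",
    "Will", "Is", "Are", "Can", "Do", "Does", "The", "An",
}


def candidate_entities(text: str) -> set[str]:
    """Crude stand-in for a named-entity recognizer: capitalized words,
    minus question words and other common sentence starters."""
    return {w for w in re.findall(r"\b[A-Z][a-z]+\b", text) if w not in NON_ENTITY_WORDS}


def unsupported_entities(question: str, article: str) -> set[str]:
    """Entity-like words in the question that never appear in the article."""
    article_words = set(re.findall(r"\b\w+\b", article))
    return candidate_entities(question) - article_words


def is_faithful(question: str, article: str) -> bool:
    """Treat a question as faithful if every entity-like word is grounded in the article."""
    return not unsupported_entities(question, article)


# Paragraph and questions copied from the example record above.
ARTICLE = (
    "Sleepaway camps across the United States experienced financial losses last year "
    "after government regulations prompted by the pandemic mostly kept them closed. "
    "Rules vary by state this year, but many camps will be allowed to reopen with "
    "social-distancing guidelines in place. Gov. Andrew M. Cuomo of New York has "
    "indicated that camps in the state will be able to open this year, but he has not "
    "provided clear guidelines for how they will do so yet."
)
CANDIDATES = {
    "Consistent": "What happened to the sleepaway camps that were closed during the pandemic?",
    "nonfactual": "How is Letitia James able to open a sleepaway camp in New York City after the pandemic?",
}

if __name__ == "__main__":
    for name, question in CANDIDATES.items():
        print(name, is_faithful(question, ARTICLE), sorted(unsupported_entities(question, ARTICLE)))
    # Consistent True []
    # nonfactual False ['City', 'James', 'Letitia']
```

Note that “City” is flagged only because the paragraph says “New York” rather than “New York City”, which shows how brittle word matching is; a production check would more plausibly rely on a trained entity recognizer and a question-answering model to test answerability.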

Why Generate Questions?

Until now, most NLP research in this domain has focused on generating factoid questions: questions with simple, short answers. These are the kinds of questions you might simply Google or look up on Wikipedia, such as “What is Covid-19?”

Open-ended questions, however, are more complicated and require longer, more complex answers. Examples include “What is the most granular picture of the city’s vaccination effort to date?”, “Why are people with disabilities being targeted for layoffs so much more than other workers?” and “What are the prosecutors looking for from the Trump Organization?” These types of questions are often why readers turn to Times journalism, and thus represent an important area for us to explore.

We think there are many compelling potential use cases for this work, including:

- Publishing news-based FAQs. The ability to automatically generate questions about a given topic could make it easier to launch an FAQ page for a new topic.

- Anticipating reader needs. While someone reads a news article, a recommendation interface could present a series of questions related to topics mentioned in the article, along with links to news articles containing the answers.

- Improving the news search experience. Search could match readers’ questions directly with the answers embedded in news articles.
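The search use case comes down to ranking passages by how well they answer a reader’s question. A minimal sketch of that matching step is below, assuming plain bag-of-words cosine similarity as a stand-in for the large language models mentioned earlier. Those models are what let a real system match questions phrased in novel ways; word overlap alone cannot. The names `bag_of_words`, `cosine`, and `best_passage` are hypothetical, and the passages are sentences from the sleepaway camp paragraph above.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_passage(question: str, passages: list[str]) -> int:
    """Index of the passage most similar to the question.
    Passages must be non-empty; the first passage wins ties."""
    q = bag_of_words(question)
    return max(range(len(passages)), key=lambda i: cosine(q, bag_of_words(passages[i])))


PASSAGES = [
    "Sleepaway camps across the United States experienced financial losses last year "
    "after government regulations prompted by the pandemic mostly kept them closed.",
    "Rules vary by state this year, but many camps will be allowed to reopen "
    "with social-distancing guidelines in place.",
]

if __name__ == "__main__":
    print(best_passage("Will camps be allowed to reopen this year?", PASSAGES))  # 1
```

Swapping `bag_of_words` for a sentence-embedding model would leave `cosine` and `best_passage` unchanged, which is one reason to keep the representation and the ranking logic separate.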

About This Work

In 2021, R&D launched a postdoctoral fellowship in collaboration with the New York City Media Lab to deepen our explorations into natural language processing. That fellowship produced this work, done jointly with Tuhin Chakrabarty, a PhD candidate in Computer Science at Columbia University.

Want to learn more about our research into natural language processing? Read our previous work about keeping humans in the loop, sourcing data from Mechanical Turk, and training with zero-shot learning.

If you’re working on similar problems or are interested in working together, reach out to rd@nytimes.com.